A technique that allows robots to estimate the pose of objects by touching them

Humans are able to find objects in their surroundings and detect some of their properties simply by touching them. While this skill is particularly valuable for blind individuals, it can also help people with no visual impairments to complete simple tasks, such as locating and grabbing an object inside a bag or pocket.

Researchers at the Massachusetts Institute of Technology (MIT) have recently carried out a study aimed at replicating this human capability in robots, allowing them to work out where objects are located simply by touching them. Their paper, pre-published on arXiv, highlights the advantages of developing robots that can interact with their surrounding environment through touch rather than merely through vision and audio processing.

“The goal of our work was to demonstrate that with high-resolution tactile sensing it is possible to accurately localize known objects even from the first contact,” Maria Bauza, one of the researchers who carried out the study, told TechXplore. “Our approach makes an important leap compared to previous works on tactile localization, as we do not rely on any other external sensing modality (like vision) or previously collected tactile data related to the manipulated objects. Instead, our technique, which was trained directly in simulation, can localize known objects from the first touch which is paramount in real robotic applications where real data collection is expensive or simply unfeasible.”

Because it is trained in simulation, the technique devised by Bauza and her colleagues does not require extensive data collection. The researchers first developed a framework that simulates contacts between a given object and a tactile sensor, which assumes that the robot has access to data about the object it is interacting with (e.g., its 3-D shape and other properties). These contacts are represented as depth images, which show how far the object penetrates into the tactile sensor.
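To make the idea of a contact depth image concrete, here is a minimal sketch in Python. It assumes a deliberately simplified model that is not taken from the paper: the object's local surface is given as a grid of heights, the sensor membrane is a flat plane at a fixed height, and each pixel stores how far the object pushes past that plane. The function name `penetration_depth_image` and the toy values are hypothetical.

```python
def penetration_depth_image(object_heights, membrane_height):
    """Return a grid of penetration depths (same units as the inputs).

    Simplifying assumption: the sensor membrane is a flat plane at
    `membrane_height`, and any part of the object above that plane
    penetrates the sensor by the difference. Pixels the object does not
    reach are set to zero.
    """
    return [
        [max(0.0, height - membrane_height) for height in row]
        for row in object_heights
    ]


# Hypothetical 3x3 patch of object surface heights, in millimetres.
object_patch = [
    [0.0, 0.5, 0.0],
    [0.5, 1.5, 0.5],
    [0.0, 0.5, 0.0],
]

depth_image = penetration_depth_image(object_patch, membrane_height=0.4)
```

In this toy patch only the central bump and its four neighbours reach the membrane, so the depth image is non-zero at those five pixels and zero at the corners.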

Subsequently, Bauza and her colleagues used state-of-the-art machine-learning techniques for computer vision and representation learning to match real tactile observations gathered by a robot with the set of contacts generated in simulation. Every contact in the simulated dataset is weighted according to the likelihood that it matches the real, observed contact, which ultimately allows the framework to obtain a probability distribution over possible object poses.
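The weighting step described above can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the researchers' implementation: contacts are assumed to be already encoded as small embedding vectors, similarity is measured with cosine similarity, and a softmax with a hypothetical `temperature` parameter turns the similarities into probabilities. The pose names and vectors are made up.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def pose_distribution(observed, simulated, temperature=0.1):
    """Weight each simulated contact by its similarity to the observed one.

    `simulated` maps a candidate pose label to the embedding of the
    contact simulated at that pose. Returns a dict of probabilities
    that sum to 1. Cosine similarity and the softmax temperature are
    illustrative assumptions.
    """
    scores = [
        cosine_similarity(observed, embedding) / temperature
        for embedding in simulated.values()
    ]
    peak = max(scores)  # subtract the maximum for numerical stability
    weights = [math.exp(score - peak) for score in scores]
    total = sum(weights)
    return {pose: w / total for pose, w in zip(simulated, weights)}


# Hypothetical embeddings for three candidate poses.
simulated_contacts = {
    "pose_a": [1.0, 0.0, 0.0],
    "pose_b": [0.0, 1.0, 0.0],
    "pose_c": [0.9, 0.1, 0.0],
}
observed_embedding = [1.0, 0.05, 0.0]

distribution = pose_distribution(observed_embedding, simulated_contacts)
best_pose = max(distribution, key=distribution.get)
```

In this toy example `pose_a` and `pose_c` produce nearly identical contacts, so they end up with almost equal probability while `pose_b` is effectively ruled out. Returning the whole distribution, rather than only `best_pose`, preserves that ambiguity for later stages.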

“Our method encodes contact, represented as depth images, into an embedded space, which greatly simplifies computational cost allowing real-time execution,” Bauza said. “As it can generate meaningful pose distributions, it can be easily combined with additional perception systems. In our work, we exemplify this in a multi-contact scenario where several tactile sensors simultaneously touch an object, and we must incorporate all these observations into the object’s pose estimation.”

Essentially, the method devised by this team of researchers can simulate contact information simply based on an object’s 3-D shape. As a result, it does not require any previous tactile data gathered while closely examining the object. This allows the technique to generate pose estimates for an object from the first time it is touched by a robot’s tactile sensors.

“We realized that tactile sensing can be extremely discriminative and produce highly accurate pose estimations,” Bauza said. “While vision will sometimes suffer from occlusions, tactile sensing does not. As a result, if a robot contacts a part of an object that is very unique, i.e., no other touch on the object would look similar to it, then our algorithm can easily identify the contact and thus the object’s pose.”

As many objects have non-unique regions (i.e., different poses of the object can result in very similar contacts), the method developed by Bauza and her colleagues predicts pose distributions, rather than single pose estimates. This feature is in stark contrast with previously developed approaches for object pose estimation, which tend to produce only a single pose estimate. Moreover, the distributions predicted by the MIT team’s framework can be directly merged with external information to further reduce uncertainty about an object’s pose.

“Notably, we also observed that combining several contacts simultaneously, as it happens when using several fingers to contact an object, rapidly decreases any uncertainty on an object’s pose,” Bauza said. “This validates our intuition that adding contacts on an object constrains its pose and eases estimation.”
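One simple way to see why extra contacts shrink the uncertainty is to fuse per-finger pose distributions by multiplying them and renormalizing. The Python sketch below does this under assumptions that are not taken from the paper: every finger's distribution is expressed over the same set of candidate object poses, the contacts are treated as independent given the pose, and the prior over poses is uniform. The function names and the numbers are hypothetical.

```python
import math


def fuse(distributions):
    """Combine per-contact pose distributions into one.

    Assumes all distributions share the same pose labels and that the
    contacts are conditionally independent given the object pose, so
    the fused probability is the renormalized product.
    """
    poses = list(distributions[0].keys())
    fused = {
        pose: math.prod(d[pose] for d in distributions) for pose in poses
    }
    total = sum(fused.values())
    return {pose: p / total for pose, p in fused.items()}


def entropy(distribution):
    """Shannon entropy in nats; lower means less uncertainty."""
    return -sum(p * math.log(p) for p in distribution.values() if p > 0)


# Hypothetical pose distributions from two fingers touching one object.
finger_1 = {"pose_a": 0.5, "pose_b": 0.4, "pose_c": 0.1}
finger_2 = {"pose_a": 0.6, "pose_b": 0.1, "pose_c": 0.3}

combined = fuse([finger_1, finger_2])
```

Each finger alone leaves a plausible second candidate (`pose_b` for the first, `pose_c` for the second), but the two fingers disagree about which one it is. After fusion most of the probability mass sits on `pose_a`, and the entropy of `combined` is lower than that of either input.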

In order to assist humans in their daily activities, robots should be able to complete manipulation tasks with high precision, reliability and accuracy. As manipulating objects directly implies touching them, developing effective techniques to enable tactile sensing in robots is of key importance.

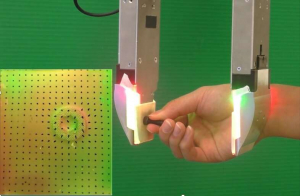

“The ability to sense touch has recently received great interest from industry, and our work achieves this via a combination of three factors: (1) a high-resolution but inexpensive sensing technique based on using small cameras to capture the deformation of a touch surface (e.g., GelSight sensing); (2) recent compact integration of this sensing technique into robot fingers (e.g., GelSlim fingers); (3) and a computational framework based on deep-learning to process effectively the high-resolution tactile images for tactile localization of known parts (e.g., this work),” Alberto Rodriguez, another researcher involved in the study, told TechXplore. “This type of technology is becoming mature and the industry is seeing the value for automating tasks that require precision such as in assembly automation.”

The technique devised by this team of researchers allows robots to estimate the pose of the objects they are manipulating in real time and with high accuracy. This lets a robot make more accurate predictions about the effects of its movements or actions, which could enhance its performance in manipulation tasks.

To work, the method created by Bauza and her colleagues requires some information about the shape of the object that a robot is manipulating. Therefore, it may prove particularly valuable for implementations in industrial settings, where manufacturers assemble items based on a clear model of their shapes.

In their future work, the researchers plan to extend their framework so that it also incorporates visual information about objects. Ideally, they would like to turn their technique into a visuo-tactile sensing system that can estimate the pose of objects with even greater accuracy.

“Another ongoing work of ours deeply related to this approach aims at exploring the use of tactile perception for complex manipulation tasks,” Bauza said. “In particular, we are learning models that allow a robot to perform accurate pick-and-place operations. The goal is to find object manipulations that not only aim at stable grasps but also aid perception. By using our approach, we can also target grasps that result in discriminative contacts which will improve tactile localization.”

Original article published on TechXplore: https://techxplore.com/news/2021-01-technique-robots-pose.html